Large pre-trained language models have shown promise for few-shot learning, completing text-based tasks given only a few task-specific examples. Will models soon solve classification tasks that have so far been reserved for human research assistants?

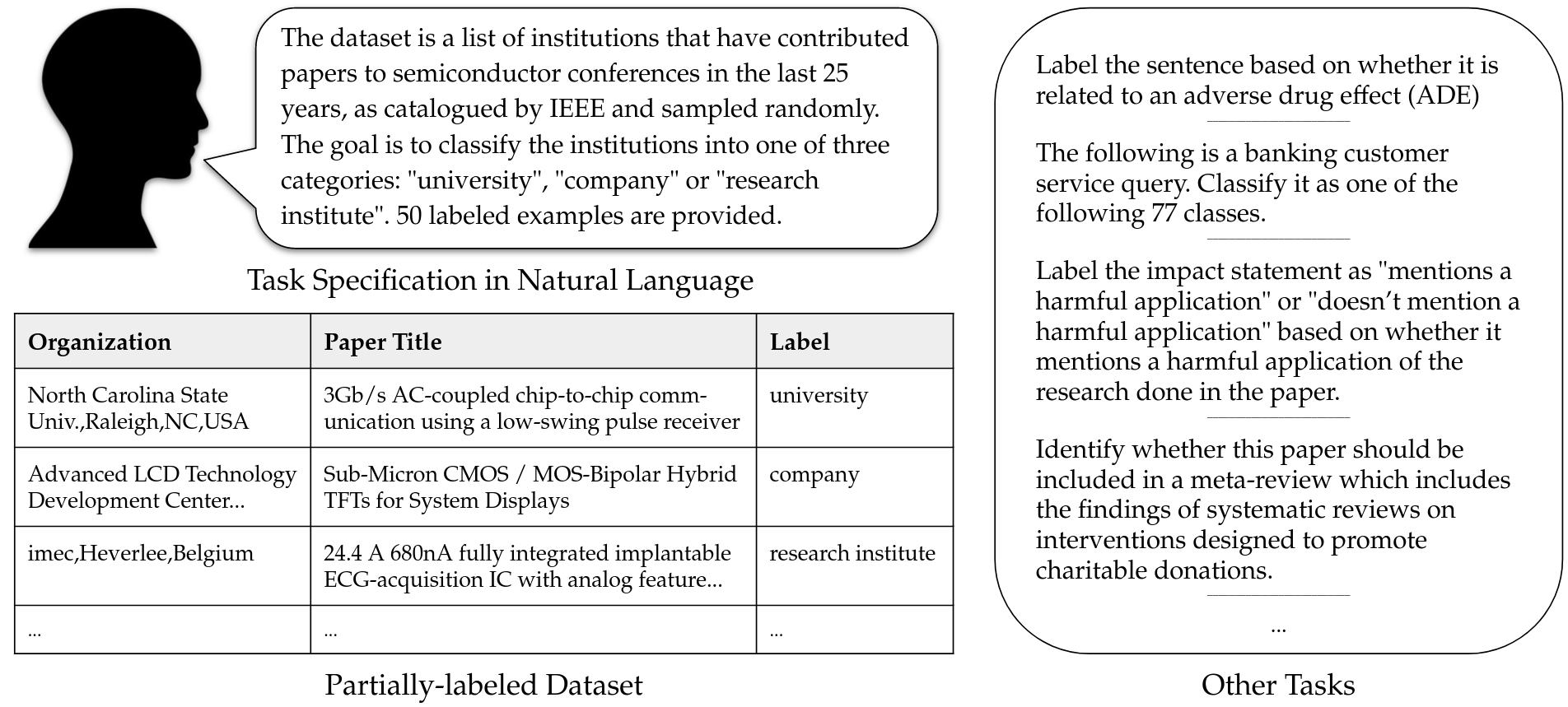

RAFT is a few-shot classification benchmark that tests language models:

- across multiple domains (lit reviews, medical data, tweets, customer interaction, etc.)

- on economically valuable classification tasks (someone inherently cares about the task)

- with evaluation that mirrors deployment (50 labeled examples per task, info retrieval allowed, hidden test set)

Resources:

- Dataset

- Submission

- Leaderboard

- Paper (NeurIPS 2021)

We’re considering a successor, RAFT 2. You can participate as co-author by submitting a dataset.